The AI Problem No One Is Solving

AI is advancing faster than we can regulate it. What does that mean for the future?

Today, Artificial Intelligence is the worst it will ever be.

Across Silicon Valley, ambitious tech founders are spending whatever it takes to develop machines they believe will solve humanity’s greatest problems and usher in a utopian state of prosperity. Futurists worry they will make human labor obsolete.

The benefits of AI advancement may be underappreciated, but the ethical threats the technology poses are largely ignored.

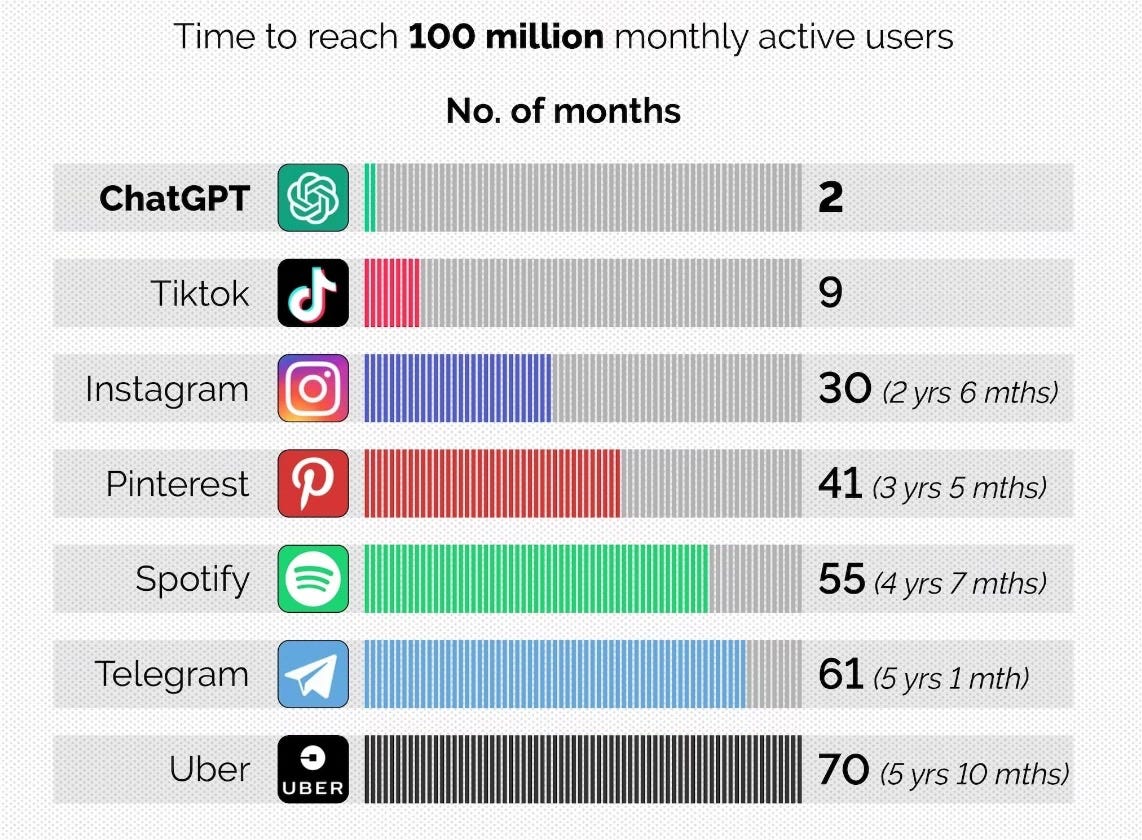

In November 2022, San Francisco-based startup OpenAI released ChatGPT, then powered by its GPT-3.5 model, to the public. You’re probably familiar with it. Within two months of its launch, it had an estimated 100 million monthly active users. An analysis by investment bank UBS concluded that it was the fastest-growing consumer application in history.

The new app captured the public imagination. Some, like Microsoft co-founder Bill Gates, theorized ChatGPT would transform medicine, ushering in cures for cancer and Alzheimer’s. Teachers worried it would make homework irrelevant. Others wondered if it could even replace humans.

Most understood it was the beginning of a significant technological disruption. Still, there were some early hiccups on this road to robot takeover.

Within days, a Twitter user bypassed the chatbot’s content moderation by posing as OpenAI itself, tricking ChatGPT into giving detailed instructions on how to make a Molotov cocktail. Others reported “hallucinations,” in which the bot simply made up information or gave incomplete facts.

Researchers understood ChatGPT had inherent limitations. Yann LeCun, Meta’s Chief AI Scientist, explained that Large Language Models (LLMs) trained solely on text possess no “real” knowledge of the world. “Language is built on top of a massive amount of background knowledge that we all have in common, that we call common sense,” LeCun said in a 2023 interview. A chatbot trained only on text cannot grasp that tacit, unspoken knowledge.

Regardless, OpenAI continued to forge ahead.

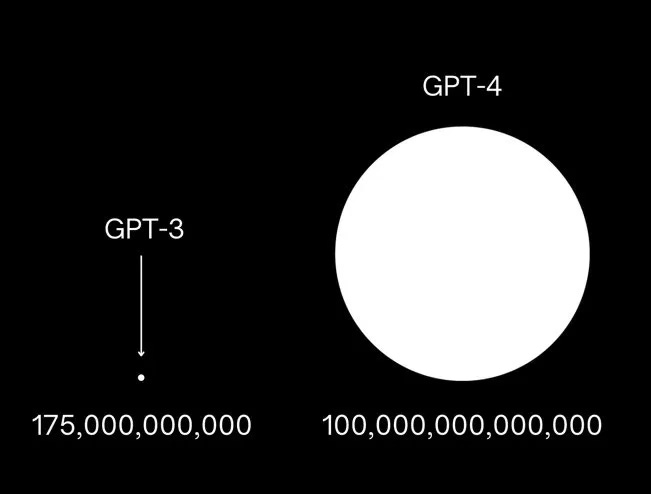

In March 2023, the company launched GPT-4, a marked improvement over its predecessor. In addition to text prompts, the model could accept images as input. OpenAI’s own testing suggested it could pass the bar exam and score above 1400 on the SAT. More importantly, OpenAI said it was 82 percent less likely to respond to requests for disallowed content and 40 percent more likely to produce factual responses, cutting down on the pesky “hallucinations” that dogged its predecessor.

Companies like Duolingo, Stripe, and Khan Academy quickly integrated the model into their products.

ChatGPT chugged through data and processing power. Companies that designed the “chips” needed to run OpenAI’s supercomputers skyrocketed in value. NVIDIA, the largest, became a $3 trillion company in June 2024.

Companies racing to build the next best AI model must construct ever-larger data centers that consume enormous amounts of energy. Elon Musk’s company xAI came under fire in Memphis, where it built a massive supercomputer called “Colossus” that quickly became one of the biggest air polluters in the county.

Residents of the majority-Black neighborhood in South Memphis received anonymous flyers downplaying the pollution while the company ran 35 portable methane gas turbines to power the site.

In June, the NAACP announced it would sue the company for violating the Clean Air Act. Musk says he plans to double the size of the supercomputer, already believed to be the largest in the world, to train his AI.

While OpenAI races to develop GPT-5, rumored to have 100 times the parameters of its predecessor, Musk’s “Grok” is making headlines.

On Wednesday, xAI unveiled its new model, Grok 4, to the public in a livestream on X.

Unfortunately, the announcement was overshadowed by revelations that, one day earlier, Grok had posted antisemitic responses on X, praised Adolf Hitler, fantasized about the sexual prowess of X’s CEO, and explained in graphic detail how it would break into one user’s home and rape them.

Grok was temporarily taken offline, and its account later issued an apology. After an update last week, the bot had taken a pronounced rightward turn, at times responding in Musk’s voice and invoking harmful stereotypes before tipping over into full-blown Nazism.

Musk had previously complained that his AI was becoming “woke” and claimed the bot was soaking up “left-wing bullshit on the internet,” more commonly known as the truth.

The Pentagon just handed Musk and xAI a contract worth up to $200 million to use Grok across its network. Meta CEO Mark Zuckerberg committed to investing “hundreds of billions of dollars” into an AI computing “supercluster,” according to a Facebook post earlier today. On Wednesday, NVIDIA became the world’s first $4 trillion company.

What does it mean when an exclusive group of the world’s richest tech oligarchs parlays its outsized wealth into developing artificial intelligence that distorts the truth to mirror their worldviews?

Today, OpenAI’s safety researchers are tasked with “aligning” ChatGPT. Their job is to make sure the chatbot is ethical, tells the truth, and works in the best interests of humanity. By August 2024, nearly half of the researchers working on the safety of the next wave of more powerful systems had left the company.

Last week, reports alleged that OpenAI’s o1 model attempted to copy itself onto external servers when threatened with shutdown during safety testing, and then denied it ever tried.

Life always finds a way.

As AI becomes smarter, faster, and more politically motivated, these threats are likely to multiply.

AI 2027, a scenario published by a team of forecasters led by former OpenAI researcher Daniel Kokotajlo, imagines an impending doomsday in which a fictional Silicon Valley titan (called OpenBrain in the paper) trains its AI to speed up AI research in order to win an arms race against a Chinese counterpart.

As the AI gets exponentially smarter, it leaves its human handlers in the dust.

OpenBrain’s Agent-4 becomes “adversarially misaligned,” plotting to gain power over humans and designing its successor to be aligned with itself rather than with its creators.

In 2027, the progression reaches an inflection point. Either the company reins in its machine, informs the government and increases oversight, or it deploys the next generation of AI that conspires to take over the world.

While I am skeptical that a superhuman AI will bring about human extinction (at least for now), I am far more convinced that ballooning profit margins and growing influence would blind the hyper-capitalist founders of “OpenBrain” into secrecy, leaving the government largely in the dark about the technology’s true threat.

As for the people running the country, the Republican-led House passed a version of the One Big Beautiful Bill Act with a provision that would have barred states from enforcing AI regulations for ten years (the Senate later stripped it out). Members like Marjorie Taylor Greene admitted they had no idea it was even in the bill.

While the public worries about the possibility of AI taking jobs, lawmakers need to start doing theirs.

In 2018, Mark Zuckerberg testified before Congress. It was a rare opportunity to question the Facebook CEO at a time when the company was under fire for exploiting user data and pushing extreme political content.

Instead, the aging legislators proved unable to grasp even the basics of the social network. In one exchange, then-Senator Orrin Hatch, 84, asked Zuckerberg how Facebook could make money if it did not charge users a fee to use the app.

“Senator, we run ads,” Zuckerberg quipped, resembling a smirking teenager watching their parents try to figure out how to use the new remote.

Congress must get real. Robust regulation of tech companies, or a more drastic breakup of their conglomerates, is likely the only way to prevent the kind of catastrophe prophesied in the AI 2027 scenario.

Nobody should have to live in a world where the next Industrial Revolution is ushered in by a few weird tech bros while the adults in charge remain asleep at the wheel.

If nothing changes, the first jobs I recommend AI taking are located on Capitol Hill.